Evaluation¶

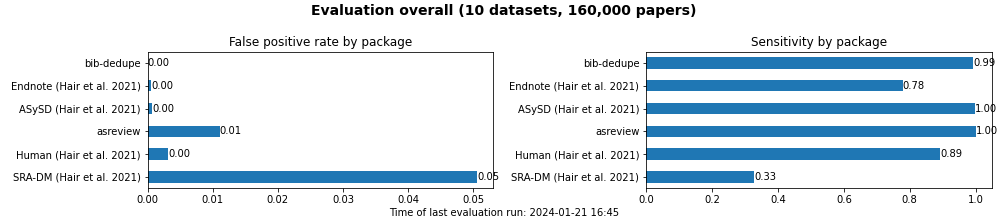

We run a continuous evaluation of tools for duplicate removal in bibliographic datasets. The evaluation script is executed by a GitHub workflow on a weekly basis. Detailed results are exported to a csv file, and aggregated summaries are exported to current_results.md.

Tools are ranked according to false-positive rate. The lower the false-positive rate, the better the tool.

Rank |

Tool |

Type |

Comment |

|---|---|---|---|

1 |

Python library |

|

|

2 |

R package |

|

|

4 |

Python library |

|

|

3 |

Web-based (Ruby) |

|

|

NA |

Python library |

|

|

NA |

Proprietary software |

|

|

NA |

Proprietary software |

|

|

NA |

Endnote |

Proprietary software (local) |

|

NA |

Covidence |

Proprietary software (web-based) |

|

Datasets¶

Dataset |

Reference |

Size (n) |

Status |

|---|---|---|---|

[4] |

1,856 |

Included (based on OSF) |

|

[4] |

1,415 |

Included (based on OSF) |

|

[4] |

1,988 |

Included (based on OSF) |

|

[4] |

1,292 |

Included (based on OSF) |

|

[2] |

8,948 |

Included (based on OSF) |

|

[2] |

79,880 |

Included (based on OSF) |

|

[2] |

1,845 |

Included (based on OSF) |

|

[2] |

3,438 |

Included (based on OSF) |

|

[2] |

53,001 |

Included (based on OSF) |

|

[5] |

7,159 |

Included |

|

TBD |

[1] |

NA |

Requested: 2023-11-14 |

TBD |

[3] |

NA |

Requested: 2023-11-14 |

Note

The SYNERGY datasets are not useful to evaluate duplicate identification algorithms because they only contain IDs, and the associated metadata would have no variance.

Duplicate definition¶

Duplicates are defined as potentially differing bibliographic representations of the same real-world record (Rathbone et al. 2015,[4]). This conceptual definition is operationalized as follows. The following are considered duplicates:

Papers referring to the same record (per definition)

Paper versions, including the author’s original, submitted, accepted, proof, and corrected versions

Papers that are continuously updated (e.g., versions of Cochrane reviews)

Papers with different DOIs if they refer to the same record (e.g., redundantly registered DOIs for online and print versions)

The following are considered non-duplicates:

Papers reporting on the same study if they are published separately (e.g., involving different stages of the study such as pilots and protocols, or differences in outcomes, interventions, or populations)

A conference paper and its extended journal publication

A journal paper and a reprint in another journal

It is noted that the focus is on duplicates of bibliographic records. The linking of multiple records reporting results from the same study is typically done in a separate step after full-text retrieval, using information from the full-text document, querying dedicated registers, and potentially corresponding with the authors [see Higgins et al. sections 4.6.2 and 4.6.2].

The datasets may have applied a different understanding of duplicates. We double-checked borderline cases to make sure that the duplicate pairs in the data correspond to our definition.

Rathbone et al. (2015) [4]: “A duplicate record was defined as being the same bibliographic record (irrespective of how the citation details were reported, e.g. variations in page numbers, author details, accents used or abridged titles). Where further reports from a single study were published, these were not classed as duplicates as they are multiple reports which can appear across or within journals. Similarly, where the same study was reported in both journal and conference proceedings, these were treated as separate bibliographic records.”

Borissov et al. (2022) [1]: “Following a standardized definition [6, 7, 9], we defined one or more duplicates as an existing unique record having the same title, authors, journal, DOI, year, issue, volume, and page number range metadata.”

Evaluation: Dataset model and confusion matrix¶

Record list before de-duplication

ID |

Author |

Title |

|---|---|---|

1 |

John Doe |

Introduction to Data Science |

2 |

|

the art of problem solving |

3 |

Jane A. Smith |

The Art of Problem Solving |

4 |

Jane M. Smith |

the art of problem solving |

5 |

Alex Johnson |

beyond the basics: advanced programming |

Duplicate matrix:

1 |

2 |

3 |

4 |

5 |

|

|---|---|---|---|---|---|

1 |

|||||

2 |

|||||

3 |

X |

||||

4 |

X |

X |

|||

5 |

Components:

ID |

Component |

|---|---|

1 |

c_1 |

2 |

c_2 |

3 |

c_2 |

4 |

c_2 |

5 |

c_3 |

Record list without duplicates:

ID |

Author |

Title |

|---|---|---|

1 |

John Doe |

Introduction to Data Science |

2 |

|

the art of problem solving |

5 |

Alex Johnson |

beyond the basics: advanced programming |

Note: Instead of paper 2, papers 3 or 4 could have been retained. It is not pre-determined which duplicates are retained or removed. That makes the evaluation challenging because the following list would also be correct:

ID |

Author |

Title |

|---|---|---|

1 |

John Doe |

Introduction to Data Science |

4 |

Jane M. Smith |

the art of problem solving |

5 |

Alex Johnson |

beyond the basics: advanced programming |

We use the compare_dedupe_id() method of bib_dedupe.dedupe_benchmark, which compares sets.

Given the set of duplicate IDs did = [2,3,4] as the ground truth, it is evident that only one of the IDs should be retained in the merged list ml (although any selection among the IDs in did would be valid).

If none of the duplicate IDs is retained, there is one false positive (FP), i.e., a record that was erroneously removed as a duplicate. The remaining (len(did)-1) records are counted as true positives (TP).

The first duplicate ID that is retained is counted as the true negative (TN), i.e., the record correctly marked as a non-duplicate. Additional records in ml are marked as false negatives (FN) because they should have been removed. Remaining records from did that are not in ml are marked as true positives (TP) because they were correctly removed from ml.